LogoRA: Local-Global Representation Alignment for Robust Time Series Classification (TKDE 2024)

We propose the Local-Global Representation Alignment framework (LogoRA), which combines multi-scale convolutional and transformer encoders, integrates representations with a fusion module, and employs advanced alignment strategies, achieving state-of-the-art performance on four time-series datasets.

Abstract

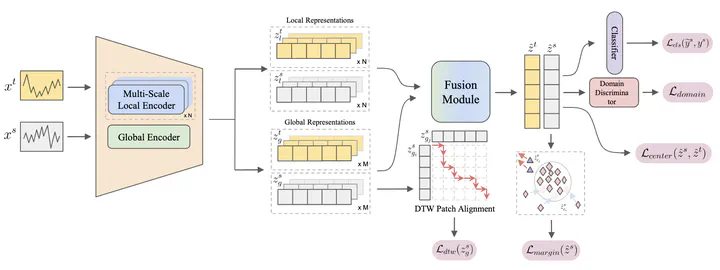

Unsupervised domain adaptation (UDA) of time series aims to teach models to identify patterns that stay consistent across temporal scenarios while disregarding domain-specific differences, so that they maintain predictive accuracy and adapt effectively to new domains. However, existing UDA methods struggle to adequately extract and align both global and local features in time series data. To address this issue, we propose the Local-Global Representation Alignment framework (LogoRA), which employs a two-branch encoder comprising a multi-scale convolutional branch and a patching transformer branch. The encoder extracts both local and global representations from time series. A fusion module then integrates these representations, enhancing domain-invariant feature alignment from multi-scale perspectives. To achieve effective alignment, LogoRA employs strategies such as invariant feature learning on the source domain, using a triplet loss for fine alignment and dynamic time warping-based feature alignment. Additionally, it reduces the gap between source and target domains through adversarial training and per-class prototype alignment. Our evaluations on four time-series datasets demonstrate that LogoRA outperforms strong baselines by up to 12.52%, showcasing its superiority in time series UDA tasks.
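To make the dynamic time warping (DTW) idea mentioned in the abstract concrete, here is a minimal sketch of the textbook DTW distance between two 1-D sequences. It is not LogoRA's implementation: the function name `dtw_distance`, the absolute-difference cost, and the toy sequences are assumptions chosen for illustration, and the framework itself applies DTW to learned features rather than to raw values.

```python
import math


def dtw_distance(a, b):
    """Textbook DTW between two 1-D sequences (illustrative, not LogoRA's code)."""
    n, m = len(a), len(b)
    # cost[i][j] holds the minimal accumulated cost of aligning a[:i] with b[:j].
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            # Local cost: absolute difference (an assumption for this sketch).
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three admissible warping steps.
            cost[i][j] = d + min(
                cost[i - 1][j],      # a advances, b[j-1] is reused
                cost[i][j - 1],      # b advances, a[i-1] is reused
                cost[i - 1][j - 1],  # both sequences advance
            )
    return cost[n][m]


# Hypothetical toy data: the same bump, delayed by one step in the second series.
source = [0.0, 1.0, 2.0, 1.0, 0.0]
shifted = [0.0, 0.0, 1.0, 2.0, 1.0, 0.0]
print(dtw_distance(source, shifted))
```

Because DTW may match one time step to several, the delayed bump aligns with the original one at no cost, whereas a point-by-point comparison would penalize the shift. That tolerance to temporal misalignment is what makes a DTW-based distance attractive for aligning time series features.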

Publication

IEEE Transactions on Knowledge and Data Engineering
